SIZE

What Resolution is 35mm Film?

This is a somewhat controversial question, and there are many possible answers. Film is an analog medium, so it doesn't have pixels per se, though film scanners do have pixels and a specific resolution. The argument rages within the industry: how much resolution is enough to capture the full detail and dynamic range of a 35mm negative and work without compromise? The fluffy and somewhat evasive answer is that a top-quality 35mm image holds in excess of 25 million pixels' worth of information, which equates to around 6K, or 6144 x 4668 pixels. Most scanners will scan up to this resolution, and there is a perfectly valid argument for working at such high resolutions, but the higher the resolution, the harder the material is to work with.
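To put those numbers in perspective, here is a minimal Python sketch that simply multiplies out the frame dimensions. The 2K and 6K sizes come from this article; the 4K size (4096 x 3112, double 2K in each direction) is an assumption, and the names `SCAN_SIZES` and `megapixels` are hypothetical.

```python
# Full-aperture scan sizes: 2K and 6K as quoted in this article,
# 4K assumed to be 2K doubled in each direction.
SCAN_SIZES = {
    "2K": (2048, 1556),
    "4K": (4096, 3112),
    "6K": (6144, 4668),
}

def megapixels(width: int, height: int) -> float:
    """Return the pixel count of a frame in millions of pixels."""
    return width * height / 1_000_000

for name, (w, h) in SCAN_SIZES.items():
    print(f"{name}: {w} x {h} = {megapixels(w, h):.1f} million pixels")
# 2K: 2048 x 1556 = 3.2 million pixels
# 4K: 4096 x 3112 = 12.7 million pixels
# 6K: 6144 x 4668 = 28.7 million pixels
```

The 6K figure of roughly 28.7 million pixels sits comfortably "in excess of 25 million".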

If you view a 6K image on a 2K output, either only a portion of the image is displayed or the image must be resampled to show the entire frame. To fit 6144 pixels across into 2048, two out of every three pixels along each axis may be dropped for viewing purposes (eight out of every nine overall). Does this not defeat the object of such a huge scan?
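A rough sketch of the two ways a 6K frame could be squeezed onto a 2K display, assuming a single-channel frame held as a NumPy array of zeros (a real scan has three 10-bit channels; only the shapes matter here). Dropping pixels keeps one in nine; averaging each 3 x 3 block uses all of them but still discards the fine detail.

```python
import numpy as np

# Stand-in single-channel 6K frame; the contents are irrelevant, only the shape.
frame_6k = np.zeros((4668, 6144), dtype=np.uint16)

step = 6144 // 2048                       # 3 source pixels per display pixel, per axis

# Option 1: naive decimation, keep every third pixel in each direction.
decimated = frame_6k[::step, ::step]

# Option 2: average each 3 x 3 block (a box filter) so every source pixel contributes.
h, w = frame_6k.shape
averaged = frame_6k.reshape(h // step, step, w // step, step).mean(axis=(1, 3))

fraction_shown = decimated.size / frame_6k.size
print(decimated.shape, averaged.shape)    # (1556, 2048) (1556, 2048)
print(f"{fraction_shown:.3f} of the pixels survive decimation")  # 0.111
```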

2K/4K Standard

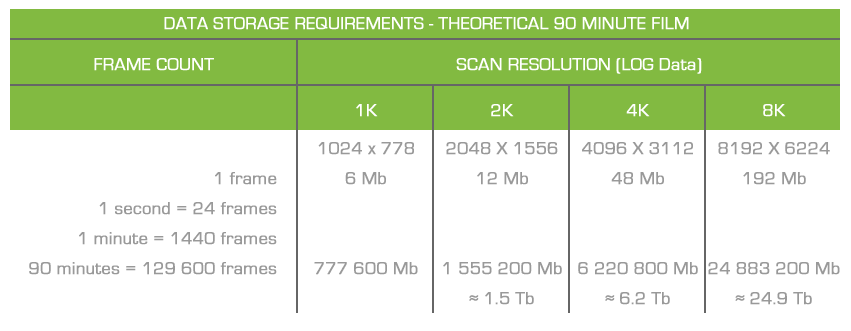

From a standard 35mm film scan, the usual workflow has been to carry out DI work at 2K (2048 x 1556 or 2048 x 1536) in 10-bit Cineon log space. This is accepted as having enough dynamic range and resolution to produce a very good working print for cinema release. Stepping away from the creative and quality argument for a moment, size in essence equates to cost. As the resolution increases, so do storage, bandwidth and, ultimately, the time spent on each frame: it takes longer to scan and longer to dust bust, and you need more disk space, faster machines and so on. The costs climb steeply, because each doubling of resolution quadruples the number of pixels.
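To make "size equates to cost" concrete, here is a minimal sketch of per-frame storage. It assumes the common layout where three 10-bit channels are packed into one 32-bit word (4 bytes per pixel), ignores file headers, and takes 4096 x 3112 as the 4K size; `frame_bytes` is a hypothetical helper.

```python
BYTES_PER_PIXEL = 4  # 3 x 10-bit channels packed into one 32-bit word (assumed)

def frame_bytes(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> int:
    """Uncompressed size of one frame in bytes, ignoring file headers."""
    return width * height * bytes_per_pixel

two_k = frame_bytes(2048, 1556)
four_k = frame_bytes(4096, 3112)
print(f"2K frame: {two_k / 1e6:.1f} MB")    # 12.7 MB
print(f"4K frame: {four_k / 1e6:.1f} MB")   # 51.0 MB
print(f"4K / 2K: {four_k / two_k:.0f}x")    # 4x
```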

To hold a theoretical 90-minute 2K feature, you need in excess of 1.5 TB of disk space. That covers only the raw scans, without a single effect added or frame altered. If you then dust bust and clean every frame, keeping the originals for safety, that requires another 1.5 TB, and when you finally grade the material and render a version with all adjustments, to be recorded back to negative, that requires a further 1.5 TB.
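The 1.5 TB figure can be checked with the same assumptions as a back-of-the-envelope sketch: 2048 x 1556 frames, 4 bytes per pixel, no headers, plus an assumed 24 fps. `feature_bytes` is a hypothetical helper.

```python
FPS = 24                               # assumed projection frame rate
FRAME_BYTES_2K = 2048 * 1556 * 4       # 10-bit RGB packed in 32 bits, headers ignored

def feature_bytes(minutes: int, frame_bytes: int = FRAME_BYTES_2K, fps: int = FPS) -> int:
    """Bytes needed to hold one full copy of a feature."""
    return minutes * 60 * fps * frame_bytes

raw = feature_bytes(90)
versions = {"raw scans": raw, "cleaned frames": raw, "graded render": raw}
total = sum(versions.values())

for name, size in versions.items():
    print(f"{name:15s} {size / 1e12:.2f} TB")
print(f"{'total':15s} {total / 1e12:.2f} TB")
```

This gives about 1.65 TB per copy and just under 5 TB for all three, consistent with "in excess of" 1.5 TB and 4.5 TB.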

For one theoretical 2K film project you will need in excess of 4.5 TB of in-house storage. How many other projects will be in house at the same time? To be cost effective, the facility would need to be scanning the next project while the current film is being output to negative and removed from the system, preferably after being backed up in case of a future problem. For a basic 2K log workflow, I would suggest a facility needs enough disk storage to hold at least three films, or approximately 15 TB. Now expand that as you move forward to 4K and then 8K.

If the same project were carried out at 4K, with four times as many pixels per frame, we would need in excess of 18 TB of storage. With multiple projects we would need around 60 TB of available space, and managing the data becomes mind-bogglingly complex. Have you ever wondered why the term Data Wrangler has appeared in the credits of recent feature films?
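Extending the same back-of-the-envelope sketch to a facility, assuming three stored versions per project (raw, cleaned, graded), three projects in house, 24 fps, 4 bytes per pixel and 4096 x 3112 for 4K. The constants and `project_tb` are hypothetical.

```python
FRAMES = 90 * 60 * 24            # 90-minute feature at an assumed 24 fps
COPIES = 3                       # raw scans, cleaned frames, graded render
PROJECTS = 3                     # films held in house at once

def project_tb(width: int, height: int, bytes_per_pixel: int = 4) -> float:
    """Terabytes (10^12 bytes) needed for all copies of one project."""
    return width * height * bytes_per_pixel * FRAMES * COPIES / 1e12

for label, (w, h) in {"2K": (2048, 1556), "4K": (4096, 3112)}.items():
    one = project_tb(w, h)
    print(f"{label}: {one:.1f} TB per project, {one * PROJECTS:.1f} TB for {PROJECTS} projects")
```

Under these assumptions 2K lands near 5 TB per project and 15 TB for three, while 4K lands near 20 TB and 60 TB, in line with the estimates in the text.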

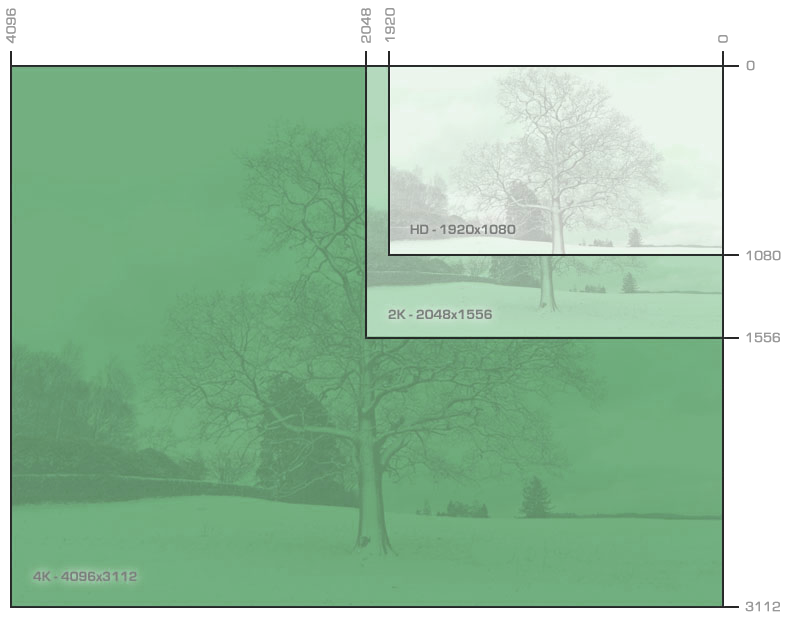

High Definition: If we examine just the image size of the frame, High Definition (1920 x 1080) is very close to the resolution of a 1.85:1 frame once the aspect ratio is taken into consideration, as the representations/comparisons show.
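A small sketch of that comparison, cropping each frame to 1.85:1 and counting pixels. Using the full 2048-pixel width of a 2K scan for the 1.85 extraction is an assumption (real extractions depend on the camera and projection aperture); 1998 x 1080 is the DCI 2K "flat" container, included for reference. `crop_to_aspect` is a hypothetical helper.

```python
HD = (1920, 1080)
TARGET_ASPECT = 1.85

def crop_to_aspect(width: int, height: int, aspect: float) -> tuple[int, int]:
    """Largest centred crop of width x height with the given aspect ratio."""
    if width / height > aspect:
        return round(height * aspect), height      # too wide: trim the sides
    return width, round(width / aspect)            # too tall: trim top and bottom

hd_185 = crop_to_aspect(*HD, TARGET_ASPECT)            # (1920, 1038)
scan_185 = crop_to_aspect(2048, 1556, TARGET_ASPECT)   # (2048, 1107)
dci_flat = (1998, 1080)                                 # DCI 2K 1.85 container

for name, (w, h) in {"HD crop": hd_185, "2K scan crop": scan_185, "DCI flat": dci_flat}.items():
    print(f"{name:13s} {w} x {h} = {w * h / 1e6:.2f} MP")
```

The HD crop comes out at about 2.0 million pixels against roughly 2.2 to 2.3 million for the 2K options, which is the sense in which the two are "very close".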

Many television features are being shot on HD, and after post-production the High Definition footage is blown up to fit standard projection aspect ratios for cinema release. This can be done during post-production, or at the recording stage by the Arri laser film recorder as it writes the image to film. The latter is possibly better, because the post house only has to store HD-sized frames, which saves valuable disk space: an uncompressed HD frame is only around 9 MB.
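How big an "uncompressed HD frame" is depends on bit depth and packing, so here is a minimal sketch assuming three RGB samples per pixel and no headers. It gives between about 7.8 and 9.3 MB for 10- and 12-bit data, in line with the figure above. `frame_mb` is a hypothetical helper.

```python
def frame_mb(width: int, height: int, bits_per_sample: int, samples_per_pixel: int = 3) -> float:
    """Uncompressed frame size in megabytes (10^6 bytes), ignoring headers and padding."""
    return width * height * samples_per_pixel * bits_per_sample / 8 / 1e6

for bits in (8, 10, 12):
    print(f"1920x1080 RGB {bits}-bit: {frame_mb(1920, 1080, bits):.1f} MB")
# 10-bit RGB packed into one 32-bit word per pixel (DPX-style): 4 bytes per pixel.
print(f"10-bit packed in 32 bits: {1920 * 1080 * 4 / 1e6:.1f} MB")
# 6.2, 7.8, 9.3 and 8.3 MB respectively
```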

It is undoubtedly a cheaper medium with fewer restrictions: tapes can hold a lot more footage than a roll of camera negative, extortionate lab costs disappear, and you know you have captured exactly what you need because the tapes can be reviewed straight away. HD is certainly very attractive. Shot with a film grade in mind, it can look fantastic, and recent films have certainly shown that HD is a growing medium.

The main problem to bear in mind is that High Definition is a linear format and film is logarithmic, so if you recorded the HD data directly to film, or simply viewed the HD data with a film LUT applied, it would not look as intended. Therefore a grade or a LUT must be burnt into the data before output to film. There are standard High Definition linear-to-logarithmic cubes (3D LUTs) that can be applied to HD data for output directly to film; combined with the blow-up from source, this would be a cheap(er) route to film.
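For illustration, a minimal 1D linear-to-log curve using the commonly quoted Kodak Cineon conventions (reference white at code 685, reference black at 95, 0.002 density per code value, negative gamma 0.6). This is a sketch, not a production LUT: it treats the HD values as linear light (real HD video is gamma encoded, which a real conversion would undo first), and the cubes mentioned above are 3D LUTs rather than a single curve. Note how the codes above 685 leave room for highlights up to roughly 13.5 times reference white.

```python
import math

# Commonly quoted Kodak Cineon conventions for 10-bit log data.
REF_WHITE = 685          # code value of reference white (linear 1.0)
REF_BLACK = 95           # code value of reference black (linear 0.0)
STEP = 300.0             # negative gamma / density per code value = 0.6 / 0.002
BLACK_OFFSET = 10 ** ((REF_BLACK - REF_WHITE) / STEP)

def lin_to_cineon(lin: float) -> int:
    """Map a linear value (0 = black, 1 = reference white) to a 10-bit Cineon code."""
    lin = max(lin, 0.0)
    # Lift linear 0 to BLACK_OFFSET so it lands on REF_BLACK instead of log10(0).
    x = lin * (1.0 - BLACK_OFFSET) + BLACK_OFFSET
    code = REF_WHITE + STEP * math.log10(x)
    return max(0, min(1023, round(code)))

def cineon_to_lin(code: int) -> float:
    """Inverse of lin_to_cineon (before rounding)."""
    return (10 ** ((code - REF_WHITE) / STEP) - BLACK_OFFSET) / (1.0 - BLACK_OFFSET)

# A 1024-entry 1D LUT from linear 0..1 to Cineon code values.
lut = [lin_to_cineon(i / 1023) for i in range(1024)]
print(lut[0], lut[-1])                 # 95 685
print(round(cineon_to_lin(1023), 1))   # highlight headroom above reference white
```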

Rick McCallum, a producer on Attack of the Clones, commented that the production spent $16,000 on 220 hours of digital tape, where a comparable amount of film would have cost approximately $1.8 million. With newer disk-based systems such as the RED cameras the cost is even lower, and exact backups can be stored at different locations on different media (for safety) without generational loss.
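The quoted figures work out per hour as below; a trivial sketch that uses only the two numbers above and makes no assumption about what either figure includes (processing, transfers and so on).

```python
TAPE_COST = 16_000        # USD for 220 hours of digital tape (as quoted)
FILM_COST = 1_800_000     # USD for a comparable amount of film (approximate, as quoted)
HOURS = 220

print(f"tape: ${TAPE_COST / HOURS:,.0f} per hour")             # $73 per hour
print(f"film: ${FILM_COST / HOURS:,.0f} per hour")             # $8,182 per hour
print(f"film costs about {FILM_COST / TAPE_COST:.1f}x as much")  # 112.5x
```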

However, this does not necessarily reflect the actual percentage saving, as the very low incremental cost of shooting additional footage may encourage filmmakers to use far higher shooting ratios with digital. Lower shooting ratios save time in the editing suite, with less material to view, but with the extra footage and flexibility a production may capture monumental or pivotal shots that might otherwise have been missed.

Interestingly, at the 2009 Academy Awards the award for best cinematography went to a film shot mainly digitally, Slumdog Millionaire. Another nominee, The Curious Case of Benjamin Button, was also shot digitally. James Cameron's Avatar (the highest-grossing movie ever) was shot for 3D using the Fusion Camera System, which used two Sony HD cameras for the left and right eyes (pioneered by PACE).

Super 2K: Something bandied about for a few years was super 2K (now probably super 4K), promoted and offered at a premium. Is it better than 2K? Technically, yes, I guess it is slightly better than standard 2K, but it is still 2K. The technical idea behind super 2K tends to be an overscan, at 4K or 6K for example, after which clever algorithms scale the image back down to 2K. The algorithms of the companies offering 'super 2K' are surrounded by as much myth and legend as the secret ingredients in the spicy coating of a piece of KFC chicken.
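Nobody outside those companies knows exactly what their algorithms do, but one plausible ingredient of an overscan is simple averaging. This sketch assumes independent, equal per-pixel noise at both scan resolutions (real film grain does not behave this neatly) and shows a 4K patch box-filtered down to 2K ending up with about half the noise of a direct 2K scan, because each output pixel averages four samples. `box_downscale` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(0)

def box_downscale(img: np.ndarray, factor: int) -> np.ndarray:
    """Average each factor x factor block: a simple box filter plus decimation."""
    h, w = img.shape
    h, w = h - h % factor, w - w % factor            # drop any ragged edge
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

NOISE = 0.02   # assumed per-pixel noise, identical at both scan resolutions
patch_4k = 0.5 + rng.normal(0.0, NOISE, size=(1024, 1024))   # flat grey patch scanned at 4K
patch_2k = 0.5 + rng.normal(0.0, NOISE, size=(512, 512))     # same patch scanned at 2K
super_2k = box_downscale(patch_4k, 2)                          # 4K overscan scaled to 2K

print(f"direct 2K noise: {patch_2k.std():.4f}")
print(f"super 2K noise:  {super_2k.std():.4f}")   # about half: four samples averaged
```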

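I do not know for sure, but I would say that a lot of the sampling is based on Nyquist. If you really would like to understand the mathematics behind Nyquist sampling, take a look, and good luck. If not, believe me when I say it is basically a very clever way of interpolating, or condensing excess data, such as three pixels into one. The core idea is that a grid of pixels can only hold detail up to half its sampling rate, so detail finer than the new, smaller grid must be filtered out before scaling down, otherwise it reappears as aliasing (moiré and jagged edges).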
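A tiny NumPy sketch of why the filtering step matters, using one row of alternating black and white pixels, the finest detail the original grid can hold. It illustrates the Nyquist idea in general, not any vendor's scaler.

```python
import numpy as np

# One row of the finest possible detail: alternating white/black pixels.
row = np.tile([1.0, 0.0], 8)                 # 16 pixels: 1, 0, 1, 0, ...

# Halve the resolution two ways.
decimated = row[::2]                         # keep every other pixel, no filtering
filtered = row.reshape(-1, 2).mean(axis=1)   # low-pass (average pairs), then decimate

print(decimated)   # all 1.0: the fine pattern aliases into a false solid white
print(filtered)    # all 0.5: detail the new grid cannot hold becomes the correct mid grey
```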

Scanning, in my humble opinion, has come a long way and can offer some pretty incredible images straight from the scanner. For instance, the Arriscan scans each image twice, in what they term flashing: one flash for a detailed shadow pass and another for highlight detail. The two separate frames are then combined into a higher dynamic range image than a single flash or single scan pass alone can achieve. I am very much in favour of this scanning technique.
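Arri's actual combining method is not described here, so this is only a generic two-exposure merge under stated assumptions: each pass clips at 1.0 and is quantised to 10 bits, the second pass receives eight times the light, and the merge uses the brighter pass wherever it has not clipped. The scene values and the helpers `capture` and `merge_passes` are hypothetical.

```python
import numpy as np

def capture(scene: np.ndarray, gain: float, bits: int = 10) -> np.ndarray:
    """Simulate one scan pass: apply exposure gain, clip at 1.0, quantise to `bits`."""
    levels = 2**bits - 1
    return np.round(np.clip(scene * gain, 0.0, 1.0) * levels) / levels

def merge_passes(low: np.ndarray, high: np.ndarray, ratio: float, clip: float = 0.95) -> np.ndarray:
    """Prefer the brighter pass (finer steps in dark areas) unless it has clipped."""
    return np.where(high < clip, high / ratio, low)

scene = np.array([0.0003, 0.01, 0.2, 0.6])   # hypothetical values, 1.0 = sensor maximum
RATIO = 8.0                                   # second pass gets 8x the light (assumed)
low = capture(scene, 1.0)
high = capture(scene, RATIO)
merged = merge_passes(low, high, RATIO)
print(low)      # the darkest value is quantised to 0 in the single pass
print(merged)   # close to the scene values across the whole range
```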

4K Comparison: 4K is being championed partly because many systems are now able to play the material back in real time, and digital cameras such as RED shoot 4K. These cameras inherently still have all the problems associated with digital's linear dynamic range, but careful capture can lend itself very well to the digital intermediate workflow. As I have said, though, 4K remains a premium and costly workflow: remember you are paying for the hire not only of the system and operator but also of the background storage and data personnel.
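Real-time playback is largely a bandwidth problem. Assuming uncompressed frames at 4 bytes per pixel and 24 fps (with 4K taken as 4096 x 3112), the sustained read rates come out as follows; `playback_rate` is a hypothetical helper.

```python
FPS = 24             # assumed playback rate
BYTES_PER_PIXEL = 4  # 10-bit RGB packed into 32 bits (assumed)

def playback_rate(width: int, height: int, fps: int = FPS, bpp: int = BYTES_PER_PIXEL) -> int:
    """Sustained read rate in bytes per second for uncompressed playback."""
    return width * height * bpp * fps

for name, (w, h) in {"2K": (2048, 1556), "4K": (4096, 3112)}.items():
    print(f"{name}: {playback_rate(w, h) / 1e6:,.0f} MB/s")
# 2K: 306 MB/s
# 4K: 1,224 MB/s
```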

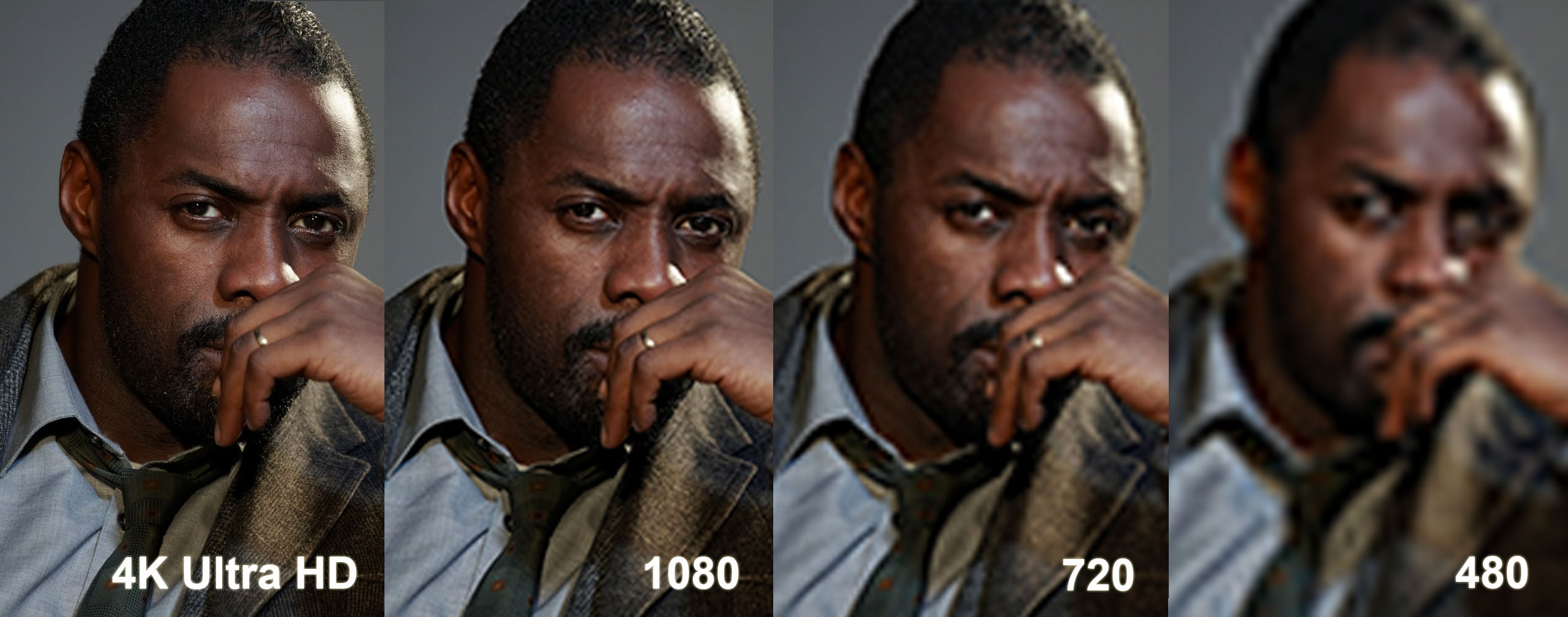

Discussing size and data is difficult - a picture speaks a thousand words.

Hopefully the comparison above demonstrates the difference in size.

Scanning at a higher resolution will give far better clarity and definition in the image. I am sure that if we had never seen the 4K version we would never question 2K and would simply consider it 'good enough'. I originally suggested that when 4K became the standard format I would be editing this section, questioning why we ever bothered with 2K, and that by then 8K would have become the new '4K'. I believe that conversation is now upon us.

For me, bigger is not necessarily better: if the image is linear and the highlights are blown, the image is difficult to grade because the detail (data) has gone. Scanned film and LOG data at least give you some headroom. (Below: Arriscan 2K/4K film comparison)
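A minimal sketch of why blown linear highlights cannot be graded back. The linear encoding clips everything above reference white to the same code, so no grade can separate those values again, while a log curve keeps them distinct. The log curve here is a hypothetical one spanning 10 stops below and 4 stops above white, not Cineon or any camera's curve.

```python
import math

def encode_linear(x: float, bits: int = 10) -> int:
    """Linear encoding: reference white (1.0) at the top code, anything brighter clips."""
    top = 2**bits - 1
    return round(min(max(x, 0.0), 1.0) * top)

def encode_log(x: float, bits: int = 10, stops_below: int = 10, stops_above: int = 4) -> int:
    """Hypothetical log curve: spreads the stops from -stops_below to +stops_above evenly."""
    top = 2**bits - 1
    span = stops_below + stops_above
    stops = math.log2(max(x, 2.0 ** -stops_below))   # clamp the deepest shadows
    return round(min(max((stops + stops_below) / span, 0.0), 1.0) * top)

highlights = [0.9, 1.5, 3.0, 6.0]    # linear scene values; 1.0 = reference white
linear_codes = [encode_linear(v) for v in highlights]
log_codes = [encode_log(v) for v in highlights]
print("linear:", linear_codes)   # [921, 1023, 1023, 1023]: three highlights merged
print("log:   ", log_codes)      # four distinct codes: the highlight detail survives
```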